SABnzbd - Basic Setup

This basic example is based on the use of Docker images.

Keep in mind that the paths are set up so that hardlinks and instant moves work.

More info HERE

Bad path suggestion

The default path setup suggested by some Docker developers, which encourages people to use mounts like /movies, /tv, /books or /downloads, is very suboptimal: it makes them look like two or three file systems even if they aren't (because of how Docker's volumes work). It is the easiest way to get started, but it has a major drawback: you lose the ability to hardlink or instant move, so a slower and more I/O-intensive copy + delete is used instead.

But you can change this by replacing the pre-defined/recommended paths, for example:

- /downloads => /data/downloads, /data/usenet, /data/torrents

- /movies => /data/media/movies

- /tv => /data/media/tv
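To check whether a pair of folders really supports hardlinks, the simplest probe is to try one. The minimal Python sketch below assumes it runs inside the container (or on the host) with write access to both folders; the function name `can_hardlink` and the probe file name are hypothetical. It deliberately attempts a real link instead of comparing device IDs, because Linux refuses `link()` and `rename()` across different mount points even when both are backed by the same file system, which is exactly the situation separate Docker volumes create.

```python
import os
import uuid


def can_hardlink(src_dir: str, dst_dir: str) -> bool:
    """Probe whether a file in src_dir can be hardlinked into dst_dir.

    A real link attempt is used instead of comparing st_dev, because Linux
    rejects link()/rename() across different mount points (EXDEV) even when
    both mounts point at the same underlying file system.
    """
    probe_name = f".hardlink-probe-{uuid.uuid4().hex}"  # hypothetical probe name
    src = os.path.join(src_dir, probe_name)
    dst = os.path.join(dst_dir, probe_name)
    with open(src, "w") as fh:
        fh.write("probe")
    try:
        os.link(src, dst)
    except OSError:
        return False  # e.g. EXDEV: the folders are on different mounts
    else:
        os.remove(dst)  # clean up the link we just created
        return True
    finally:
        os.remove(src)  # always remove the probe file itself
```

For example, `can_hardlink("/data/usenet", "/data/media")` inside a container that mounts the whole /data folder should return `True`, while a pair like /downloads and /movies mounted as separate volumes will typically return `False`.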

Note

Settings that aren't covered can be changed to your own liking or left at their defaults.

I also recommend enabling the Advanced Settings at the top right.

General

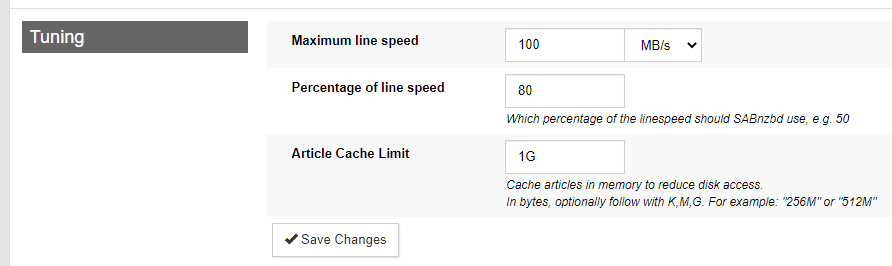

Tuning

I recommend setting a sane maximum speed and then limiting below that, to keep your internet connection happy. You can use Settings => Scheduling to toggle the limit on and off based on time, slowing it down while your family is using the internet and speeding it up at night when they're not.

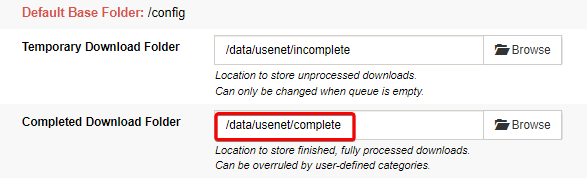
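SABnzbd's Scheduling page does this for you; the sketch below only illustrates the arithmetic and the time-window logic behind such a schedule. It is a minimal Python example assuming the limit is expressed as a percentage of the maximum speed; the 50% day and 100% night values, the 23:00 to 07:00 night window, and all function names are hypothetical.

```python
from datetime import time


def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert a line speed in Mbit/s to MB/s (8 bits per byte)."""
    return mbit_per_s / 8


def limit_percent_at(
    now: time,
    day_pct: int = 50,          # hypothetical daytime limit
    night_pct: int = 100,       # hypothetical night-time limit (no throttling)
    night_start: time = time(23, 0),
    night_end: time = time(7, 0),
) -> int:
    """Return the speed-limit percentage that should apply at `now`.

    Handles a night window that wraps past midnight (e.g. 23:00 to 07:00).
    """
    if night_start <= night_end:
        in_night = night_start <= now < night_end
    else:
        in_night = now >= night_start or now < night_end
    return night_pct if in_night else day_pct


def effective_limit(max_mbyte_s: float, pct: int) -> float:
    """Speed limit in MB/s when the limit is a percentage of the maximum."""
    return max_mbyte_s * pct / 100
```

For a 100 Mbit/s line that is 12.5 MB/s, so you might set the maximum slightly below that (say 12 MB/s); a 50% daytime limit then works out to 6 MB/s.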

Folders

User Folders

Settings => Folders => User Folders

Here you set up your download path/location.

ATTENTION

- You set your download location in your download client

- Your download client ONLY downloads to your download folder/location.

- You tell Radarr/Sonarr where you want your clean media library

- Starr Apps import from your download location (copy/move/hardlink) to your media folder/library

- Plex, Emby, Jellyfin, or Kodi should ONLY have access to your media folder/library

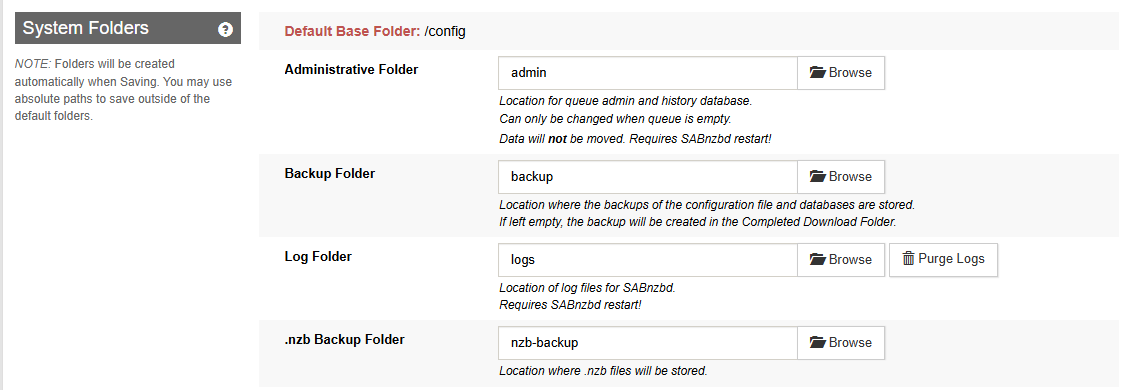
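The same rules can be written down as a small Python sketch, assuming a single shared root at /data like the /data paths suggested at the top of this guide; the folder names, the `ACCESS` table, and both helpers are hypothetical. Note that Sonarr/Radarr get the whole root as one mount, so the download folder and the library sit on the same mount and hardlinks/instant moves keep working.

```python
from pathlib import Path

# Hypothetical layout under one shared root; adjust names to your categories.
LAYOUT = ["usenet/movies", "usenet/tv", "media/movies", "media/tv"]

# Sub-folder of the shared root each app is given ("" = the whole root).
ACCESS = {
    "sabnzbd": "usenet",      # only downloads to the download folder
    "sonarr_radarr": "",      # needs downloads + library on ONE mount to hardlink
    "media_server": "media",  # Plex/Emby/Jellyfin/Kodi: library only
}


def create_layout(root: str) -> list[Path]:
    """Create the folder tree under `root` and return the folder paths."""
    paths = []
    for rel in LAYOUT:
        path = Path(root, rel)
        path.mkdir(parents=True, exist_ok=True)
        paths.append(path)
    return paths


def mount_for(app: str, root: str = "/data") -> str:
    """Path to mount into the given app's container."""
    sub = ACCESS[app]
    return f"{root}/{sub}" if sub else root
```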

System Folders

Settings => Folders => System Folders

Starting with version 4.3.x, SABnzbd has a hidden (archive) history.

Using the .nzb Backup Folder is still recommended as it is useful for dupe detection (hash matching) or if you need to retry something from the past.

The default is empty; I picked history because it is easy. With Docker it ends up in the /config folder, which isn't crazy, but even though these are only compressed nzb files, the folder can end up pretty big. The choice is yours.

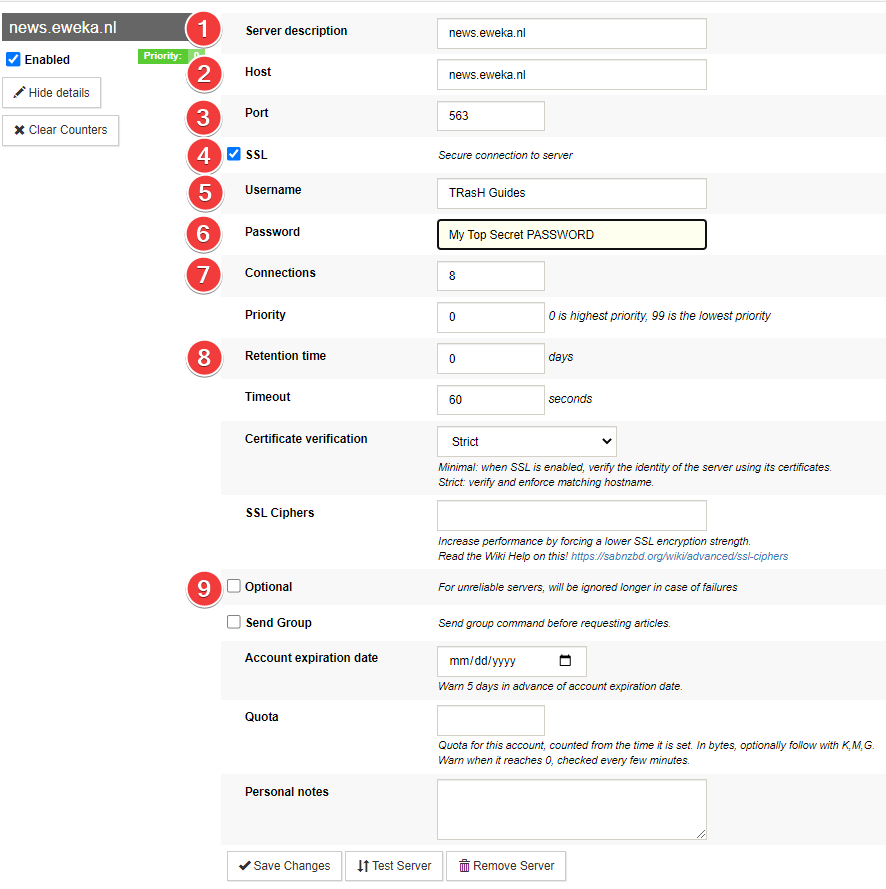
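To illustrate what hash matching against the backup folder means, here is a minimal Python sketch. It assumes the backups are gzip-compressed files named `*.nzb.gz` and uses an MD5 of the decompressed nzb purely as a content fingerprint; the function names are hypothetical, this is not SABnzbd's own code, and its duplicate detection may also compare names and other details.

```python
import gzip
import hashlib
from pathlib import Path


def nzb_fingerprint(nzb_bytes: bytes) -> str:
    """Content fingerprint of an nzb (MD5 used purely as a checksum here)."""
    return hashlib.md5(nzb_bytes).hexdigest()


def backup_fingerprints(backup_dir: str) -> set[str]:
    """Fingerprint the decompressed contents of every *.nzb.gz backup."""
    fingerprints = set()
    for path in Path(backup_dir).glob("*.nzb.gz"):
        with gzip.open(path, "rb") as fh:
            fingerprints.add(nzb_fingerprint(fh.read()))
    return fingerprints


def is_duplicate(nzb_bytes: bytes, backup_dir: str) -> bool:
    """True if an identical nzb already exists in the backup folder."""
    return nzb_fingerprint(nzb_bytes) in backup_fingerprints(backup_dir)
```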

Servers

Settings => Servers => Add Server

USP = Usenet Service Provider

- Server description.

- The hostname you get from your USP.

- The port that you get and can use from your USP.

- Make sure you enable SSL so you get a secure connection to the USP.

- Username that you got or created with your USP.

- Password you got or created with your USP.

- Use the lowest number of connections that reaches your max download speed, plus 1 connection.

- How long the articles are stored on the news server.

- For unreliable servers: the server will be ignored for longer in case of failures.
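The connection rule of thumb and the retention setting are simple arithmetic; the Python sketch below spells them out. The per-connection speed is an assumption you would measure yourself (for example by watching the download speed with a single connection), and the example figures and function names are hypothetical.

```python
import math
from datetime import datetime, timedelta


def connections_needed(line_mbyte_s: float, per_conn_mbyte_s: float) -> int:
    """Fewest connections that reach the line speed, plus one spare."""
    if per_conn_mbyte_s <= 0:
        raise ValueError("per-connection speed must be positive")
    return math.ceil(line_mbyte_s / per_conn_mbyte_s) + 1


def within_retention(posted: datetime, now: datetime, retention_days: int) -> bool:
    """True if an article posted at `posted` should still be on the server."""
    return now - posted <= timedelta(days=retention_days)
```

For example, a 100 Mbit/s line (12.5 MB/s) with connections that each manage about 2.5 MB/s needs 5 connections to saturate it, so you would configure 6.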

Categories

Settings => Categories

Covered and fully explained in SABnzbd - Paths and Categories

Switches

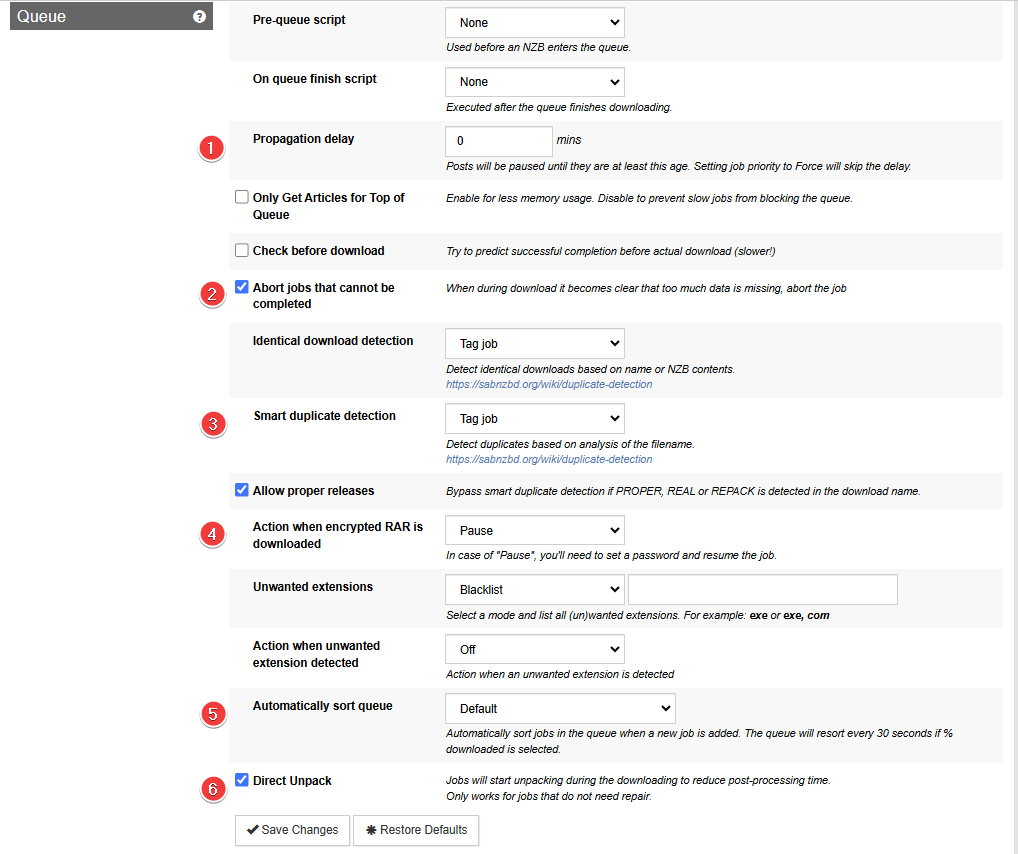

Queue

- If you have good indexers that take the nzb from the actual post rather than generating it, you may want to set the Propagation delay to 5 minutes so you're not trying to grab an nzb right at posting time. If you're not downloading from the same server the poster used, articles may wrongly appear to be missing because they haven't necessarily propagated to your server yet, and if you use a reseller it may take them even longer to get the articles from their upstream provider.

- When it becomes clear during downloading that too much data is missing, abort the job to make sure Sonarr/Radarr gets the notification so it can look for another release.

- Since we have the .nzb backup (history) folder, you can decide what you want to do here with duplicate downloads. Mine is set to Tag job, but Pause or Fail job may make sense too.

- For encrypted RAR downloads: with "Pause", you'll need to set a password and resume the job; with "Abort", Sonarr/Radarr can look for another release.

- This should be left at the default unless you know what you are doing. For example, with a decent-size queue, having SABnzbd sort it every 30 seconds could cause CPU spikes, on top of shuffling around jobs that may be in the middle of an action. If a job gets moved to the front of the queue, it could take even longer to extract, or stall while waiting for the next update, because by default SABnzbd only runs 3 unpackers simultaneously (configurable).

- If your hardware isn't up to snuff, including CPU and/or I/O performance, disabling Direct Unpack and/or enabling Pause Downloading During Post-Processing can help. Defaults are fine for most hardware, though.
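The propagation delay and the abort decision from the list above can be sketched in a few lines of Python. This is a simplified illustration, not SABnzbd's implementation: the 5-minute delay comes from the text, but the function names are hypothetical, and the repair check counts abstract par2 blocks, whereas SABnzbd has to work from missing articles and the par2 data actually available.

```python
from datetime import datetime, timedelta

PROPAGATION_DELAY = timedelta(minutes=5)  # value suggested in the text


def seconds_until_ready(posted: datetime, now: datetime,
                        delay: timedelta = PROPAGATION_DELAY) -> float:
    """Return 0 if the post is old enough to download, else seconds to wait."""
    ready_at = posted + delay
    return max(0.0, (ready_at - now).total_seconds())


def can_still_complete(missing_blocks: int, recovery_blocks: int) -> bool:
    """Simplified repairability check.

    par2 needs at least one recovery block per missing or damaged data block.
    """
    return missing_blocks <= recovery_blocks
```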

Post processing

Settings => Switches => Post processing


- This should be set off if you have decent internet. The time spent grabbing pars, if they are needed for verification/repair, is trivial compared to the time a repair might take running, failing, realizing it needs more pars, grabbing the next set, and retrying.

- It is your choice whether to enable this option. It's usually an easy check and does provide benefits if the job doesn't have par2 files, as not every release has a par set or SFV file. Generally speaking, scene releases should have both, but this depends on how they were posted and how the indexer generates the nzb. SFV is commonly used, and even a basic CRC32 checksum is better than not knowing whether the file is good. Parsing an SFV file and checking the files' integrity takes very few resources. This may seem redundant, since the par2 check would also catch this, but the check is so cheap that the downside is almost non-existent.

- Only unpack and run scripts on jobs that passed the verification stage. If turned off, all jobs will be marked as Completed even if they are incomplete.

- Unpack archives (rar, zip, 7z) within archives.

- This can help with subs that are in folders inside the rar, because Sonarr/Radarr don't look in sub-folders.

- Best to leave this disabled and let the Starr apps handle it, since they look at runtime and make a much more intelligent decision about whether something is a sample than SABnzbd does.

- Helps with de-obfuscation, especially of invalid file extensions.
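The SFV check described above is cheap because it only computes a CRC32 per file and compares it with the value listed in the .sfv file. The Python sketch below shows the idea; it assumes the common SFV layout of one `filename CRC32` pair per line with `;` comment lines, and the function names are hypothetical rather than SABnzbd's own.

```python
import zlib
from pathlib import Path


def parse_sfv(sfv_path: str) -> dict[str, int]:
    """Read `filename CRC32` pairs from an SFV file, skipping ';' comments."""
    entries = {}
    text = Path(sfv_path).read_text(encoding="utf-8", errors="replace")
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(";"):
            continue
        parts = line.rsplit(None, 1)  # file names may contain spaces
        if len(parts) != 2:
            continue  # malformed line
        name, crc_hex = parts
        try:
            entries[name] = int(crc_hex, 16)
        except ValueError:
            continue  # checksum field is not hex
    return entries


def file_crc32(path: Path, chunk_size: int = 1 << 20) -> int:
    """CRC32 of a file, read in chunks so large files stay cheap on memory."""
    crc = 0
    with open(path, "rb") as fh:
        while chunk := fh.read(chunk_size):
            crc = zlib.crc32(chunk, crc)
    return crc & 0xFFFFFFFF


def verify_sfv(sfv_path: str) -> dict[str, bool]:
    """Map each listed file to True (CRC matches) or False (bad or missing)."""
    base = Path(sfv_path).parent
    results = {}
    for name, expected in parse_sfv(sfv_path).items():
        target = base / name
        results[name] = target.is_file() and file_crc32(target) == expected
    return results
```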

Sorting

Settings => Sorting

Special

Rarely used options. Don't change these without checking the SABnzbd Wiki first, as some have serious side-effects. The default values are between parentheses.

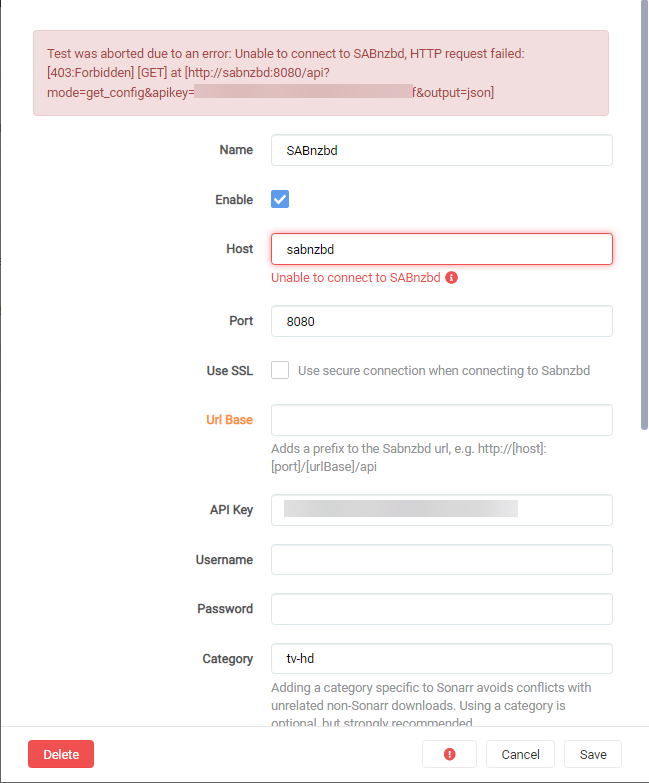

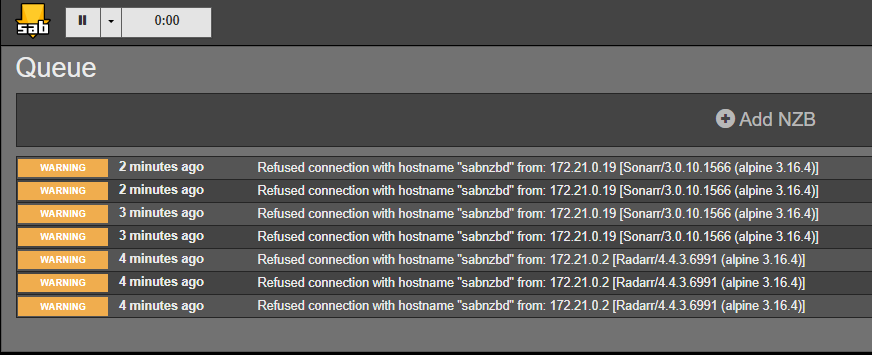

Unable to connect to SABnzbd

If you're trying to connect your Starr apps to SABnzbd and you get an error like Unable to connect to SABnzbd after clicking Test, SABnzbd will show something like this:

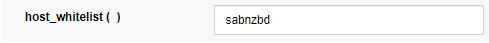

Then go to Settings => Special => Values.

Scroll down to host_whitelist ( ) and enter your Docker container name and/or your domain name.

Example: sabnzbd.domain.tld, <container name>
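To see why the container name matters: when Sonarr/Radarr reach SABnzbd at an address like `http://sabnzbd:8080`, the HTTP `Host` header they send is `sabnzbd:8080`, and SABnzbd roughly compares the hostname part against host_whitelist. The Python sketch below is a simplified illustration of that comparison, assuming a container named `sabnzbd`; it is not SABnzbd's code, and the real check has additional exceptions.

```python
from urllib.parse import urlsplit


def parse_whitelist(value: str) -> set[str]:
    """Split a comma-separated host_whitelist value into lowercase names."""
    return {name.strip().lower() for name in value.split(",") if name.strip()}


def host_allowed(host_header: str, whitelist: set[str]) -> bool:
    """Check the hostname part of an HTTP Host header against the whitelist."""
    hostname = urlsplit("//" + host_header).hostname  # drops the port, lowercases
    return hostname is not None and hostname in whitelist
```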

Recommended Sonarr/Radarr Settings

The following settings are recommended for Sonarr/Radarr; otherwise Sonarr/Radarr might miss downloads that are still in the queue/history, because Sonarr/Radarr only look at the last xx items in the queue/history.

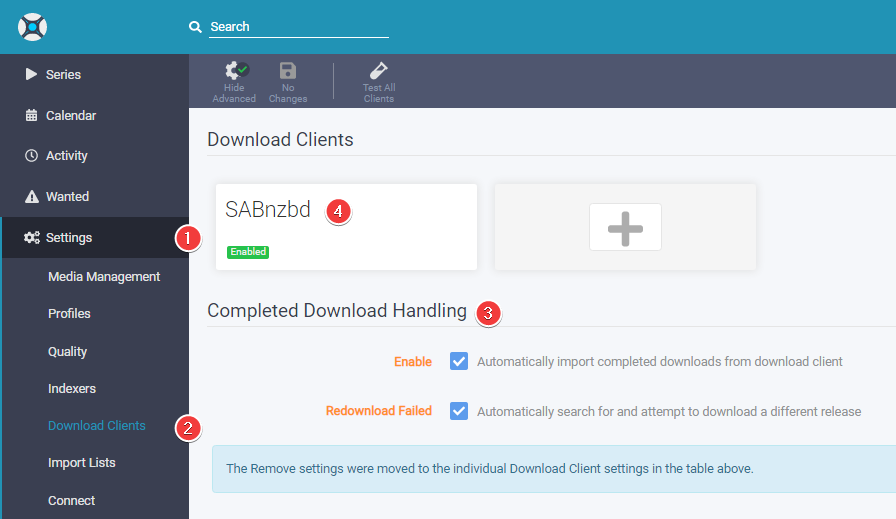
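The reason is easiest to see with a toy model: if a client only fetches the newest N entries, anything it is still waiting on that has been pushed further down the list is simply never seen. The Python sketch below assumes a window of 30 purely for illustration (the actual amount Sonarr/Radarr request is not stated here), and the job IDs and function names are hypothetical.

```python
def visible_jobs(history_newest_first: list[str], window: int) -> list[str]:
    """The slice of history a client sees if it only requests `window` items."""
    return history_newest_first[:window]


def missed_jobs(tracked: set[str], history_newest_first: list[str],
                window: int) -> set[str]:
    """Tracked jobs that fall outside the requested window and go unseen."""
    return tracked - set(visible_jobs(history_newest_first, window))
```

With 40 jobs in history (job-40 newest, job-1 oldest) and a window of 30, a tracked job-35 is still seen but job-3 is missed.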

Sonarr


Settings => Download Clients

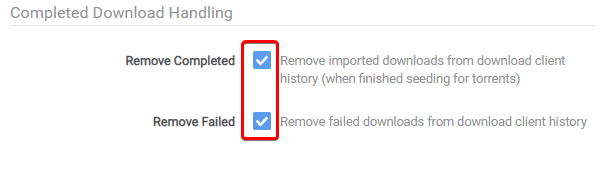

Make sure you check both boxes under Completed Download Handling at step 3.

Select SABnzbd at step 4 and scroll down to the bottom of the new window where it says Completed Download Handling and check both boxes.

Radarr

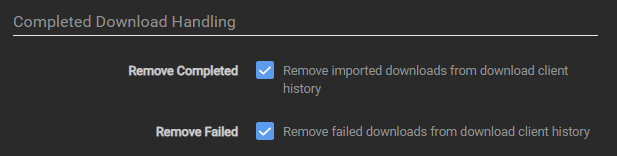


Settings => Download Clients

Make sure you check both boxes under Completed Download Handling at step 3, and both boxes under Failed Download Handling at step 4.

Select SABnzbd at step 5 and scroll down to the bottom of the new window where it says Completed Download Handling and check both boxes.

Thanks to fryfrog for helping me with the settings and providing the info needed to create this Guide.

Questions or Suggestions?